Decision trees: A machine learning algorithm

From artificial intelligence to data analytics, almost every modern technological application uses machine learning to some extent. For example, when you automate a robot or any other machine, machine learning algorithms help it handle tasks that would otherwise need human judgment and still produce accurate outputs. Similarly, machine learning is used when a software program has to approximate human reasoning rather than follow a fixed set of hand-written rules.

A machine learning algorithm is a procedure that learns rules from data instead of having every rule written out by a programmer. For example, when a program has to decide which of two options to proceed with based on its inputs, the trained model supplies the decision rule and the program follows the corresponding control flow. Several types of machine learning algorithms are in use at present, and one of the most common is the decision tree. Although it is built on familiar ideas from statistics and data structures, understanding trees and their applications can be difficult at first, especially if you have no experience working with data structures.

Keeping this in mind, we have shared some insights into what a decision tree is, the terminology around it, and the benefits it offers in machine learning.

What is a decision tree?

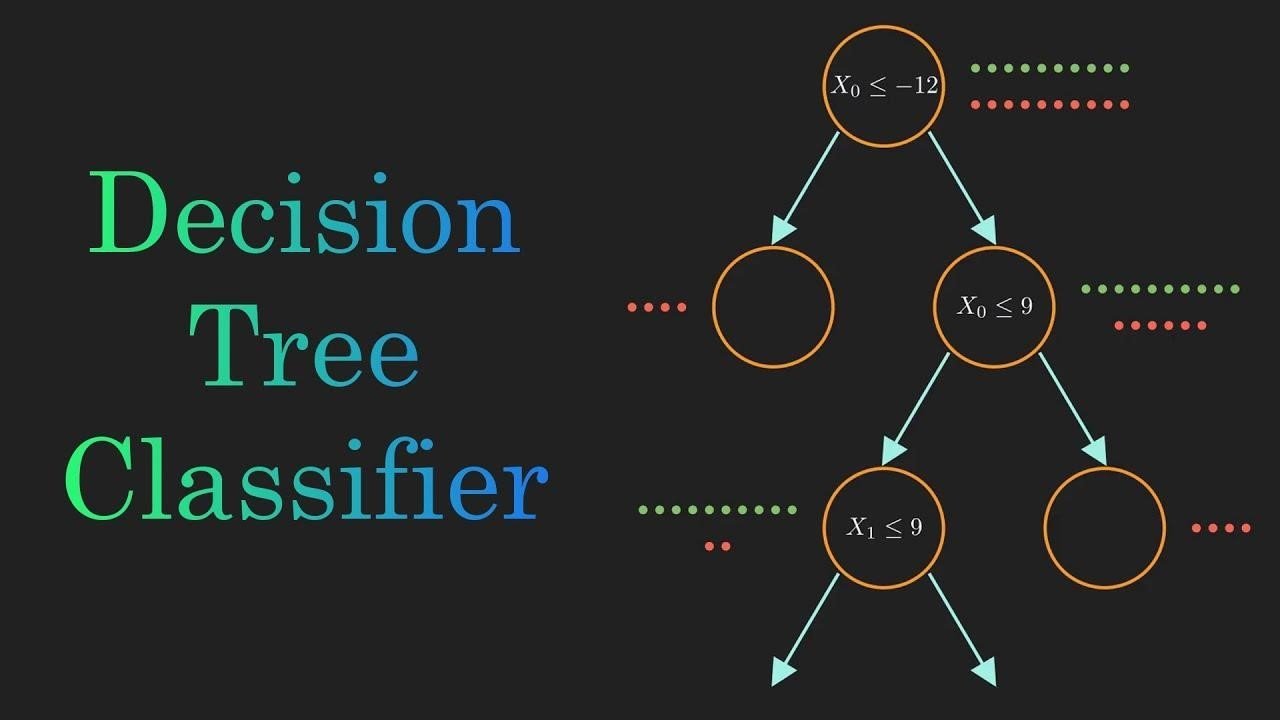

A decision tree is a flowchart-like model used for classification and regression. The structure of a typical decision tree has two components: nodes and branches. Nodes are the building blocks of the tree; each one either tests a feature of the input or holds a final output. Branches are the directional links between nodes, defining where the control flow goes after a parent node is evaluated.
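To make the flowchart idea concrete, here is a minimal sketch of nodes and branches in plain Python. The `Node` class, the `predict` function, and the toy features `temperature_c` and `wind_kph` are all hypothetical names chosen for illustration, and the tree is written by hand rather than learned from data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One block of the flowchart: either a test on a feature or a final answer."""
    feature: Optional[str] = None      # name of the feature tested at this node
    threshold: Optional[float] = None  # test is: sample[feature] <= threshold
    left: Optional["Node"] = None      # branch followed when the test is true
    right: Optional["Node"] = None     # branch followed when the test is false
    prediction: Optional[str] = None   # set only on nodes that have no branches


def predict(node: Node, sample: dict) -> str:
    """Follow one branch at a time until a node holding a prediction is reached."""
    while node.prediction is None:
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.prediction


# Hypothetical hand-written tree: decide whether to play outside.
tree = Node(
    feature="temperature_c", threshold=15.0,
    left=Node(prediction="stay in"),
    right=Node(
        feature="wind_kph", threshold=30.0,
        left=Node(prediction="play"),
        right=Node(prediction="stay in"),
    ),
)

print(predict(tree, {"temperature_c": 22.0, "wind_kph": 10.0}))  # play
print(predict(tree, {"temperature_c": 8.0, "wind_kph": 5.0}))    # stay in
```

This sketch is a classification tree because the final nodes hold class labels. For regression, the same structure would hold a number in `prediction` instead.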

Decision tree terminology in machine learning

To understand the decision tree algorithm used in machine learning, you must know the basic terminology. Keeping this in mind, we have explained the terms that you will need most often.

Root node: The root node is the topmost node of the decision tree and has no parent. Evaluation always begins at the root node and follows one branch at a time down the tree.

Decision nodes: The internal nodes below the root node are known as decision nodes, as each one tests a condition and directs the control flow to one of its child nodes.

Leaf or terminal nodes: These nodes sit at the end of the tree and have no further branches. Once evaluation reaches a terminal node, the value stored there is returned as the output. The next set of inputs is then evaluated again starting from the root node.

Subtree: A subtree is a section of the decision tree made up of one node together with all the nodes below it. Each subtree is itself a smaller decision tree that handles the inputs routed to it by its parent node.

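Pruning: Just as you prune hedges and bushes to keep them in shape, a decision tree is pruned by removing branches that contribute little to its predictions. This keeps the tree from fitting noise in the training data, so the outputs generated at the terminal nodes stay accurate on new inputs.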
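The terms above can be sketched on a small hand-written tree. Everything here is an illustrative assumption: the nested-dict layout, the `counts` field (a made-up number of training samples per class at each leaf), and the pruning rule itself, which only collapses a decision node whose two leaves already predict the same label. Real pruning procedures typically weigh accuracy against tree size and are more involved than this.

```python
# Decision nodes have "test", "left" and "right"; leaf nodes have "label" and "counts".
tree = {  # root node
    "test": "age <= 30",
    "left": {"label": "no", "counts": {"no": 40, "yes": 2}},        # leaf node
    "right": {                                                       # decision node
        "test": "income <= 50",
        "left": {"label": "yes", "counts": {"no": 1, "yes": 30}},   # leaf node
        "right": {"label": "yes", "counts": {"no": 3, "yes": 24}},  # leaf node
    },
}


def is_leaf(node):
    return "label" in node


def count_leaves(node):
    """Number of terminal nodes in the tree (or subtree) rooted at this node."""
    if is_leaf(node):
        return 1
    return count_leaves(node["left"]) + count_leaves(node["right"])


def prune(node):
    """Return a copy where a decision node with two same-label leaves becomes one leaf."""
    if is_leaf(node):
        return node
    left, right = prune(node["left"]), prune(node["right"])
    if is_leaf(left) and is_leaf(right) and left["label"] == right["label"]:
        classes = set(left["counts"]) | set(right["counts"])
        merged = {c: left["counts"].get(c, 0) + right["counts"].get(c, 0) for c in classes}
        return {"label": left["label"], "counts": merged}
    return {"test": node["test"], "left": left, "right": right}


subtree = tree["right"]        # a decision node plus everything below it
print(count_leaves(tree))      # 3
print(count_leaves(subtree))   # 2

pruned = prune(tree)
print(count_leaves(pruned))    # 2
print(pruned["right"]["label"])  # yes
```

Pruning removed the `income <= 50` test because both of its branches gave the same answer, so the smaller tree makes exactly the same predictions as the original.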

Benefits of using the decision tree algorithm in machine learning

Compared to many other machine learning algorithms, the decision tree does not require much effort in data preparation and preprocessing.

A decision tree also does not need data normalization to function, because each split only compares a feature value against a threshold. This further reduces the effort and the chances of errors.

Missing data values are also less of a problem. Many decision tree implementations can still build the tree and choose its root and decision nodes when some values are absent, although how this is handled depends on the implementation.

It is very easy to understand a decision tree and to explain it clearly to others who are not familiar with this algorithm or with machine learning as a whole.
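The point about normalization can be checked with a small sketch. The split criterion used here, Gini impurity, is an assumption on our part (it is one common choice, not the only one), and the `income` and `repaid` data are made up for illustration. The sketch picks the best threshold on a single feature, then repeats the search after min-max scaling and compares which samples land on the left branch.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 means all labels agree)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return (threshold, left_indices) minimizing weighted Gini for 'value <= threshold'."""
    best = None
    for t in sorted(set(values))[:-1]:  # skip the largest value: the right side would be empty
        left = [i for i, v in enumerate(values) if v <= t]
        right = [i for i, v in enumerate(values) if v > t]
        score = (len(left) * gini([labels[i] for i in left])
                 + len(right) * gini([labels[i] for i in right])) / len(values)
        if best is None or score < best[0]:
            best = (score, t, left)
    return best[1], best[2]


# Hypothetical data: yearly income and whether a loan was repaid.
income = [18000, 22000, 25000, 41000, 52000, 60000]
repaid = ["no", "no", "no", "yes", "yes", "yes"]

lo, hi = min(income), max(income)
scaled = [(v - lo) / (hi - lo) for v in income]  # min-max normalization to [0, 1]

raw_threshold, raw_left = best_split(income, repaid)
scaled_threshold, scaled_left = best_split(scaled, repaid)

print(raw_threshold)            # 25000
print(raw_left == scaled_left)  # True
```

The threshold value changes with the scale, but the samples sent down each branch do not, because scaling preserves the order of the values and a split depends only on that order.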

Conclusion

Although machine learning is difficult, understanding the decision tree and learning about its implementation is a good starting point for every professional. It can be used for both regression and classification problems. The algorithm is also straightforward to write, because the root and decision nodes all follow the same pattern: test a condition, then pass control to a child node.